Upgrading a Single-Node MongoDB to a Highly Available Replica Set

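Project Background

For historical reasons, one of our data-synchronization services runs against a single-node MongoDB in production. As the business has grown and this synchronization service has become more important, a single-node failure would bring the service to a halt, so the deployment needs to be upgraded to a replica set for high availability.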

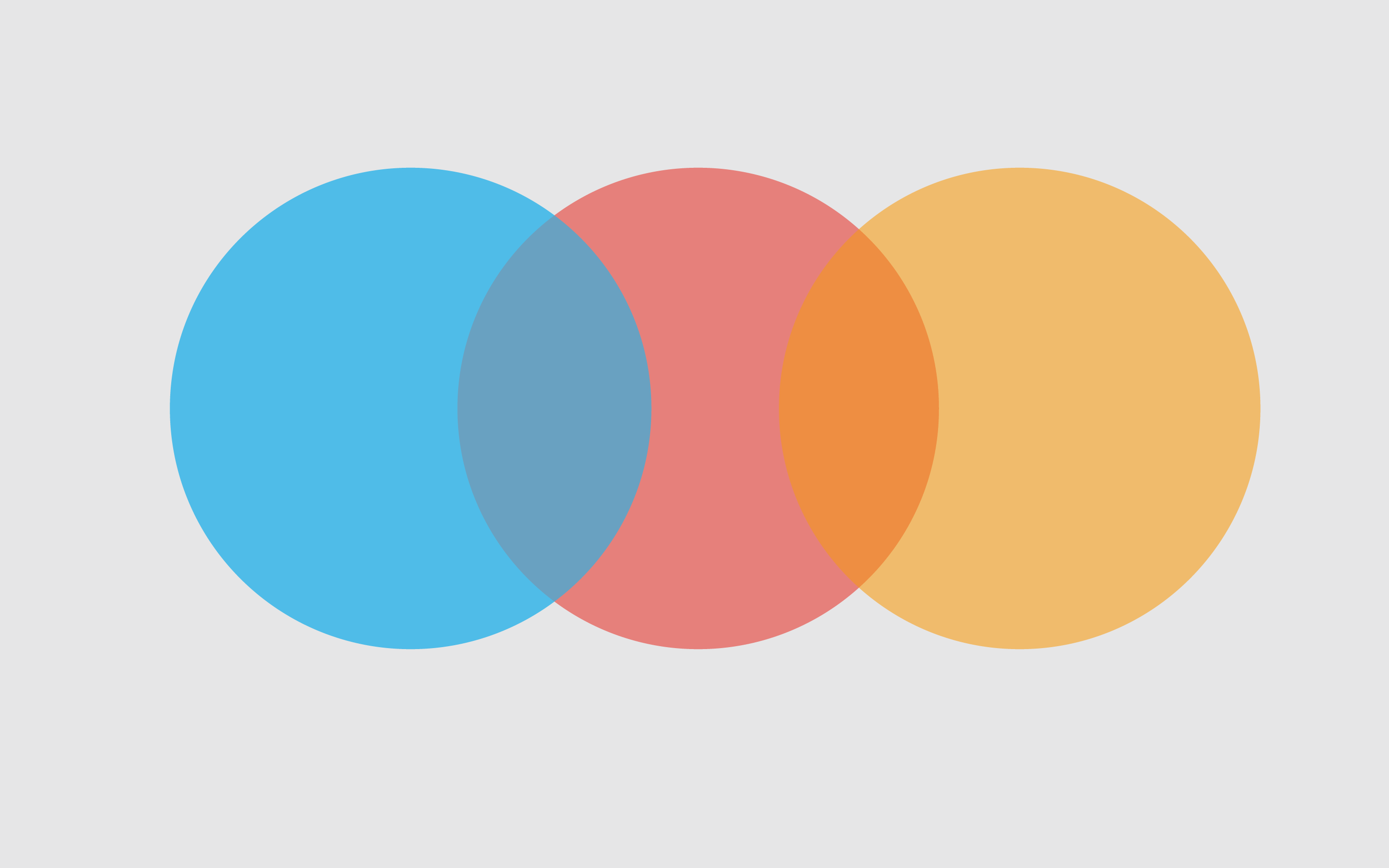

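Replica Set Architecture

The architecture diagram illustrates the MongoDB replica-set high-availability setup that this article builds: one PRIMARY node and two SECONDARY nodes.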

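Notes Before Upgrading

In a production environment, back up all MongoDB data before converting the single node to a replica set, so that an operational mistake cannot cause data loss.

Also make sure that no application connects to MongoDB for reads or writes during the upgrade. It is best to stop the dependent services and perform the upgrade during the early-morning low-traffic window.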

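1. Original Single-Node MongoDB Configuration

IP: 192.168.30.207

1.1 Original configuration file

systemLog:

1.2 Add the replica set configuration to the original configuration file

replication:

Note: comment out the authentication settings first, and enable them again once the replica set has been configured.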

2. New Node Information

| Role | IP | Port | Notes |
|---|---|---|---|
| PRIMARY | 192.168.30.207 | 27017 | Original single-node MongoDB |
| SECONDARY | 192.168.30.213 | 27017 | New node 1 |
| SECONDARY | 192.168.30.214 | 27017 | New node 2 |

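2.1 Configuration files for the new nodes

For the two SECONDARY nodes, copy the PRIMARY node's configuration file and change only the "bindIp" value to match each node.

- SECONDARY node 1 configuration file

systemLog:

- SECONDARY node 2 configuration file

systemLog:

2.2 Start the three nodes

The PRIMARY node must be restarted so that it picks up the new replication settings; the two SECONDARY nodes are simply started.

#Start command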

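3. Initialize the Replica Set

Connect with the mongo shell to the original node (192.168.30.207), which already holds the data, and run the initialization commands there. The newly added members must be empty when the set is initiated.

3.1 Initialization configuration

config = {

3.2 Initialize the replica set

> rs.initiate(config) // initialize the replica set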
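As a rough illustration of the two steps above, the configuration document and the initialization call might look like the following. This is a sketch under stated assumptions, not the original configuration: the set name rs1 is inferred from the rs1:PRIMARY shell prompt that appears in the status commands, the hosts come from the node table, and the priority values are hypothetical additions intended to keep the original node as the preferred primary. The rs helper exists only inside the mongo shell, so the call is guarded.

```javascript
// Sketch of a replica set configuration; adjust the name and hosts to your environment.
var config = {
  _id: "rs1", // assumed set name; must match replication.replSetName on every node
  members: [
    // Original node; the higher priority (an assumption) makes it the preferred primary.
    { _id: 0, host: "192.168.30.207:27017", priority: 2 },
    { _id: 1, host: "192.168.30.213:27017", priority: 1 },
    { _id: 2, host: "192.168.30.214:27017", priority: 1 }
  ]
};

// rs is defined only in the mongo shell; elsewhere this block just builds the object.
if (typeof rs !== "undefined") {
  printjson(rs.initiate(config));
}
```

Pasted into a mongo shell connected to 192.168.30.207, the guarded call runs the initialization and prints the server's reply.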

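3.3 Check the replica set status

- View the replica set status

rs1:PRIMARY> rs.status()

- View the replication lag of the secondaries

rs1:PRIMARY> rs.printSlaveReplicationInfo()

- View the replica set members and the current primary

rs1:PRIMARY> rs.isMaster()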
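The rs.status() document is long, so it can help to reduce it to one line per member when checking that the set has one PRIMARY and two healthy SECONDARY nodes. The helper below is a minimal sketch: summarizeMembers and sampleStatus are hypothetical names introduced here, and the sample document is hand-written with only the fields the helper reads (name, stateStr, health), not real output.

```javascript
// Reduce an rs.status() document to one summary line per member.
function summarizeMembers(status) {
  return status.members.map(function (m) {
    var health = m.health === 1 ? "up" : "down";
    return m.name + " " + m.stateStr + " " + health;
  });
}

// Hand-written sample with only the fields used above (not real output).
var sampleStatus = {
  set: "rs1",
  members: [
    { name: "192.168.30.207:27017", stateStr: "PRIMARY", health: 1 },
    { name: "192.168.30.213:27017", stateStr: "SECONDARY", health: 1 },
    { name: "192.168.30.214:27017", stateStr: "SECONDARY", health: 1 }
  ]
};

// Use the live status inside the mongo shell, the sample everywhere else.
var status = typeof rs !== "undefined" ? rs.status() : sampleStatus;
var out = typeof print === "function" ? print : console.log;
summarizeMembers(status).forEach(function (line) { out(line); });
```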

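4. Enable Authentication on the Replica Set

4.1 Add a super administrator account on the primary

Note: if the original single-node MongoDB already has a super administrator account, skip this step.

The user only needs to be added on the primary; the replica set replicates the primary's data to the other members automatically.

Note that the account must be created before authentication is enabled.

- Create the super administrator account

Super administrator user: mongouser, password: 123456, authentication database: admin

$ mongo --host 192.168.30.207 --port 27017

- View the created accounts

rs1:PRIMARY> use admin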
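The account creation and the follow-up check can be sketched as below. The user name, password, and authentication database are the example values given above; the choice of the built-in root role is an assumption about what "super administrator" means here, and adminUser is a hypothetical variable name. The db object exists only inside the mongo shell, so the calls are guarded.

```javascript
// User document for the super administrator, using the article's example values.
var adminUser = {
  user: "mongouser",
  pwd: "123456", // example only; choose a strong password in production
  roles: [{ role: "root", db: "admin" }] // built-in root role (assumed choice)
};

// db is defined only in the mongo shell; run this while connected to the PRIMARY.
if (typeof db !== "undefined") {
  var adminDb = db.getSiblingDB("admin");
  adminDb.createUser(adminUser);
  printjson(adminDb.getUsers()); // list users to confirm the account exists
}
```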

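4.2 Create the keyfile for replica set authentication

All replica set members must use the same keyfile. It is usually generated on one machine and then copied to the others. The user that runs mongod must be able to read the file, and on Linux the file must not be accessible to group or others (for example, mode 400); otherwise mongod reports an error at startup.

The keyfile contents must be identical on every node. The location is arbitrary, but to make it easy to find, keep it at the same fixed path on every machine, for example in the same directory as the configuration file.

- Generate the mongo.keyfile file

$ openssl rand -base64 90 -out /home/server/mongodb/conf/mongo.keyfile

- Copy the mongo.keyfile file to the same directory on the other two nodes

$ scp /home/server/mongodb/conf/mongo.keyfile root@192.168.30.213:/home/server/mongodb/conf/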
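If the key is generated from a script rather than with openssl, the same idea can be sketched in Node.js, which also makes the permission step explicit. This is an alternative under assumptions, not the procedure used above: writeKeyfile is a hypothetical helper, it targets Linux, and it expects the target file not to exist yet.

```javascript
// Node.js sketch: generate a base64 key and make the file owner read-only.
var crypto = require("crypto");
var fs = require("fs");

function writeKeyfile(path, bytes) {
  // Same idea as `openssl rand -base64 <bytes>`: random bytes, base64-encoded.
  var key = crypto.randomBytes(bytes).toString("base64");
  fs.writeFileSync(path, key + "\n");
  // mongod rejects keyfiles that grant group or other permissions on Unix.
  fs.chmodSync(path, 0o400);
  return key;
}

// Example call (not executed here):
// writeKeyfile("/home/server/mongodb/conf/mongo.keyfile", 90);
```

With 90 random bytes the key is 120 base64 characters long, well within the length MongoDB accepts for a keyfile. The file must still be owned by the user that runs mongod on each node after it is copied.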

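4.3 Edit the MongoDB configuration file to enable authentication

- Add or modify the following settings in the configuration file.

security:

- Restart all mongo nodes

4.4 Verify replica set authentication

Log in to the replica set primary with the username, password, and authentication database.

$ mongo -u mongouser -p 123456 --host 192.168.30.207 --port 27017 --authenticationDatabase admin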
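Logging in to a single host confirms that the primary accepts the credentials, but applications usually connect with a replica-set connection string that lists every member, so the driver can follow a failover. The helper below is a minimal sketch of how such a URI could be assembled: buildReplicaSetUri is a hypothetical function, the set name rs1 is inferred from the shell prompt, and the credentials are the article's example values.

```javascript
// Build a MongoDB connection string that lists all replica set members.
function buildReplicaSetUri(user, password, hosts, setName, authDb) {
  // Percent-encode the credentials so special characters do not break the URI.
  var credentials = encodeURIComponent(user) + ":" + encodeURIComponent(password);
  return "mongodb://" + credentials + "@" + hosts.join(",") +
    "/?replicaSet=" + encodeURIComponent(setName) +
    "&authSource=" + encodeURIComponent(authDb);
}

var uri = buildReplicaSetUri(
  "mongouser",
  "123456",
  ["192.168.30.207:27017", "192.168.30.213:27017", "192.168.30.214:27017"],
  "rs1",   // assumed replica set name
  "admin"  // authentication database
);
```

The resulting string can be passed to the mongo shell as its first argument or used in an application's driver configuration.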

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit DevOps Technology Stack when reposting.
